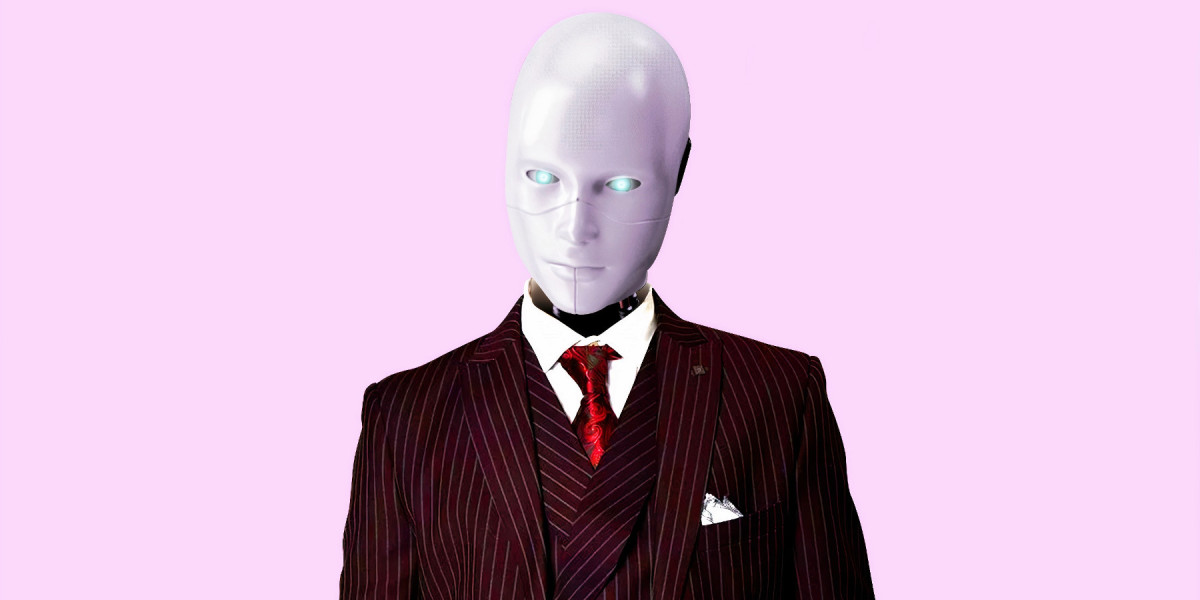

According to a study published today by researchers from the University of Cambridge, overwhelmingly white depictions of artificial intelligence in popular culture can have a number of consequences, including the erasure of people who are not white. The authors argue that normalizing the predominant depictions of AI as white can influence those who aspire to enter the artificial intelligence field, as well as managers who make hiring decisions, and can have serious repercussions. They say whiteness is not seen as merely an AI assistant with a stereotypically white voice or a robot with white features, but as the absence of color, the treatment of white as the default.

“We argue that AI racialized as white allows people of color to be completely erased from white utopian imagery,” reads the paper, titled “The Whiteness of AI,” which was accepted for publication by the journal Philosophy and Technology. “The power of the signs and symbols of whiteness lies largely in the fact that they go unnoticed and unquestioned, hidden by the myth of color blindness. As researchers such as Jessie Daniels and Safiya Noble have pointed out, this myth of color blindness is prevalent in Silicon Valley and the surrounding tech culture, where it serves to inhibit serious questions about racial framing.”

The authors of the paper are Stephen Cave, executive director of the Leverhulme Centre for the Future of Intelligence, and principal investigator Kanta Dihal. The Cambridge-based organization also includes the University of Oxford, Imperial College London, and the University of California, Berkeley. Cave and Dihal document overwhelmingly white depictions of AI in stock images used to depict artificial intelligence in the media, in humanoid robots seen on television and in movies, in science fiction dating back more than a century, and in chatbots and virtual assistants. White depictions of AI were also prevalent in Google’s search results for “artificial intelligence robot.”

They warn that a view of AI as white by default can distort people’s perception of the risks and opportunities associated with the proliferation of predictive machines in business and society, leading some to consider problems exclusively from the perspective of middle-class white people. Algorithmic bias has been documented in a variety of technologies in recent years, from automatic speech detection systems and popular language models to health care, lending, housing, and facial recognition. Bias has been found against people not only on the basis of race and gender, but also because of occupation, religion, and gender identity.

A 2018 study found that most participants ascribed a racial identity to a robot based on the color of the machine’s exterior, while another 2018 research paper found that participants in a study involving black, Asian, and white robots were twice as likely to use dehumanizing language when interacting with black and Asian robots.

Exceptions to the white default in popular culture include robots of various racial makeups in recent sci-fi works such as HBO’s Westworld and Channel 4’s series Humans. Another example, the robot Bender Rodríguez of the cartoon Futurama, was assembled in Mexico but is still voiced by a white actor.

“The Whiteness of AI” debuts after the publication of a paper in June by UC Berkeley Ph.D. student Devin Guillory on how to combat anti-Blackness in the AI community. In July, Harvard University researcher Sabelo Mhlambi presented the Ubuntu ethical framework to combat discrimination and inequality, and Google DeepMind researchers shared the concept of anticolonial AI, a work that was also published in the journal Philosophy and Technology. Both works advocate AI that empowers people rather than strengthening systems of oppression or inequality.

Cave and Dihal call an anticolonial approach a possible way forward in the fight against the problem of AI whiteness. At the Queer in AI workshop at ICML last month, DeepMind research scientist Shakir Mohamed, a coauthor of the anticolonial AI paper, also suggested queering machine learning as a way to bring more equitable forms of AI into the world.

Today’s paper, along with several of the previous papers, heavily cites Princeton University Associate Professor Ruha Benjamin and UCLA Associate Professor Safiya Noble.

Cave and Dihal attribute the phenomenon of white AI in part to a human tendency to bestow human qualities on inanimate objects, as well as to the legacy of colonialism in Europe and the United States, which uses claims of superiority to justify oppression. According to them, the prevalence of whiteness in AI also shapes some depictions of futuristic sci-fi utopias. “Instead of portraying a post-racial or color-blind future, the authors of those utopias simply omit people of color,” they wrote.

Cave and Dihal say whiteness even shapes perceptions of what a robot uprising would look like, combining attributes such as power and intelligence. “When white people imagine being overtaken by superior beings, those beings do not resemble the races they have framed as inferior. It is unimaginable to a white audience that they will be surpassed by machines that are black. Rather, it is by superlatives of themselves: hyper-masculine white men like Arnold Schwarzenegger as the Terminator, or hyperfeminine white women like Alicia Vikander as Ava in Ex Machina,” the paper says. “That’s why even the stories of an AI uprising that are obviously based on stories of slave rebellions depict the rebellious AIs as white.”

More research is needed on the effect of whiteness on the field of artificial intelligence, the authors said.

In other recent news at the intersection of ethics and AI, a bill introduced in the U.S. Senate this week by Bernie Sanders (I-VT) and Jeff Merkley (D-OR) would require private companies to obtain consent before collecting biometric data used to create technologies such as facial recognition or voiceprints for personalization with AI assistants. Separately, a team of researchers from Element AI and Stanford University suggests that academic researchers stop using Amazon Mechanical Turk in order to create AI assistants that are more useful in practice. Last week, Google AI launched its Model Card Toolkit to help people quickly adopt a popular approach to detailing the contents of data sets.