Content and moderation decisions by tech companies are the subject of endless kicking and screaming, to the point that they are now practically a campaign issue for at least one of the major American political parties. The likes of Facebook, Twitter and Google, meanwhile, are trying to convince the public of how much they are doing about the avalanche of misinformation and hateful content they have helped unleash, either by redirecting attention to whatever niche group they banned in recent days or by presenting questionable internal statistics as evidence.

So we are trying out a new format that rounds up and summarizes some of these developments, with ongoing context, on a semi-recurring basis, in the interest of everyone’s sanity (and perhaps without giving maximum weight to each swing around the merry-go-round).

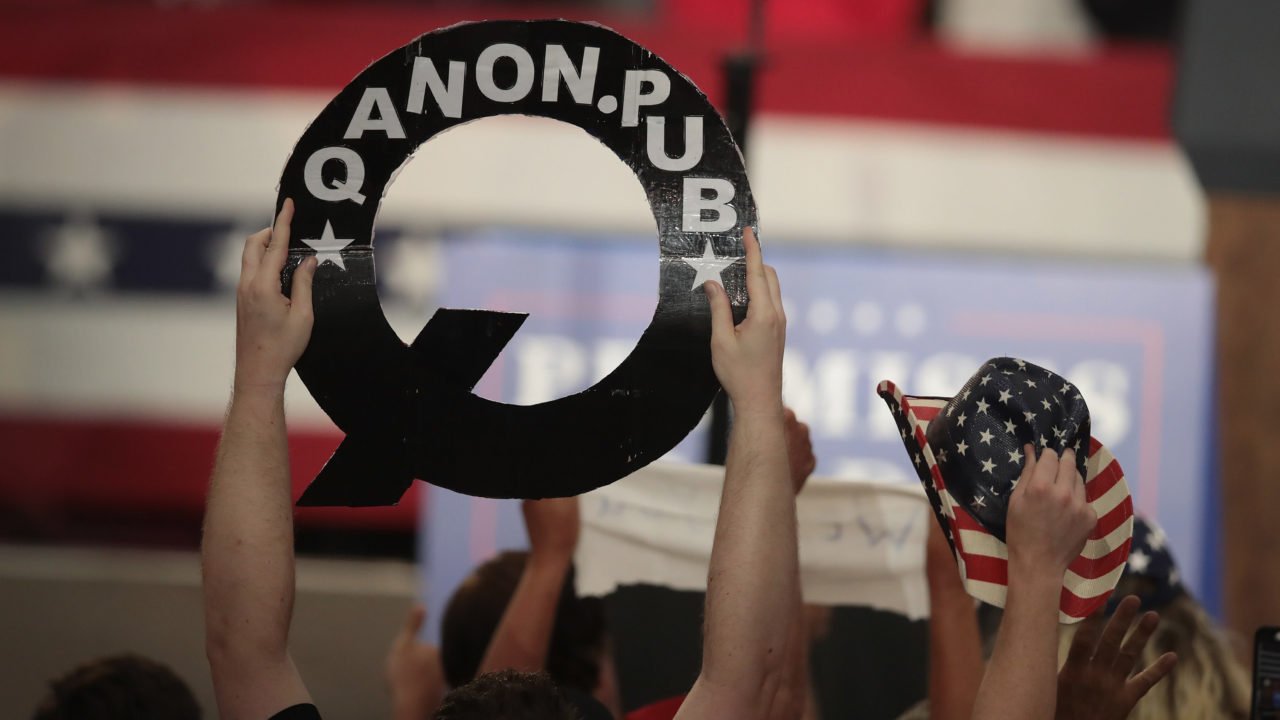

Earlier this month, Facebook announced that it had banned one of the largest QAnon groups on the site, a move that coincided with growing awareness of the scale of QAnon’s activity there. Days later, NBC News and the Guardian reported that many QAnon groups and pages on Facebook and Instagram, which together had millions of followers, were left intact even as Facebook promoted the ban as evidence that it was doing something. According to NBC, an internal Facebook investigation also showed that the company had sold 185 pro-QAnon ads that generated 4 million impressions last month.

This week, Facebook expanded its extremism policy to ban activity “tied to offline anarchist groups that support violent acts amidst protests, US-based militia organizations and QAnon.” (Not all QAnon-related content is prohibited, just calls for or speculation about violence.) The company said thousands of groups and pages had been removed from Facebook and Instagram under the expanded rules, though it softened the blow: many anti-fascist groups, an eternal bogeyman for right-wingers who insist against the evidence that they incite violent demonstrations, were also removed as some kind of balance.

Facebook wants credit for fighting QAnon, even though the movement’s popularity exploded thanks to the site’s own growth algorithms. The cat is already out of the bag: Donald Trump endorsed the QAnon movement this week, and a Facebook spokesperson said the company did not think it was flipping a switch that would end the discussion within a week.

Facebook, YouTube, Twitter and even LinkedIn managed to catch and curb the spread of a sequel to Plandemic, a misleading documentary about the novel coronavirus that they failed to prevent from gaining millions of views in May. (Unfortunately, the sequel is titled Plandemic: Indoctornation.) Facebook prevented users from linking to the video, while Twitter hid it behind a warning screen. The video failed to match the momentum of its predecessor.

However, the main reason this swift action was possible appears to be that the video’s makers heavily promoted its release in advance, thus giving the sites a heads-up. Meanwhile, a report by human rights organization Avaaz estimates that Facebook has generated at least 3.8 billion views of lies, misinformation and hoaxes about the coronavirus during the pandemic.

The short-video app TikTok, which the Trump administration has lately been threatening to ban, said this week that it had created a web page and a Twitter account, which no one will read, to address misinformation. Facebook said it plans to install a “viral breaker” that would restrict the spread of fast-rising posts, giving its moderators time to review them. (Facebook quietly pressures those moderators to give right-wing content preferential treatment, and its track record of catching misinformation early is appalling.)

A group of right-wing pundits has asked the Federal Election Commission to investigate why “Facebook, Twitter, Instagram, Uber, Lyft, PayPal, Venmo and Medium” have not overturned their bans on Islamophobic conspiracy theorist Laura Loomer now that she is a Republican candidate for Congress in Florida. Running for Congress is at least the third stunt Loomer has pulled to get her Twitter account back, counting a failed lawsuit and the time she handcuffed herself to the company’s offices in New York.

Advertising firm Ogilvy infuriated streamers on the live-streaming platform Twitch by getting broadcasters to air text-to-speech readings of the Burger King menu for chump change; Twitch did not tell Kotaku, Gizmodo’s sister site, whether this violated its advertising rules.

Reddit claims to have seen a significant reduction (18%) in hateful content after banning nearly 7,000 subreddits over hate speech and harassment.

Facebook is still trying to come up with a plan to respond to Donald Trump’s inevitable attempt to discredit the results of the 2020 election without angering conservatives too much. According to the New York Times, it may have developed a label, to be placed on posts that falsely allege voter fraud, reminding users that election results have not yet been finalized, along with a “kill switch” that would disable political ads after the election. Brain trust at work, folks:

… Executives discussed a “kill switch” for political advertising, according to two employees, that would turn off political ads after November 3 if the outcome of the election is not immediately clear or if Trump disputes the results.

Discussions remain fluid and it is unclear whether Facebook will follow through with the plan, three other people close to the discussions said.

Trump, for what it’s worth, won’t take the hint and is asking the Supreme Court to overturn a federal appeals court ruling that he can’t block critics on Twitter.