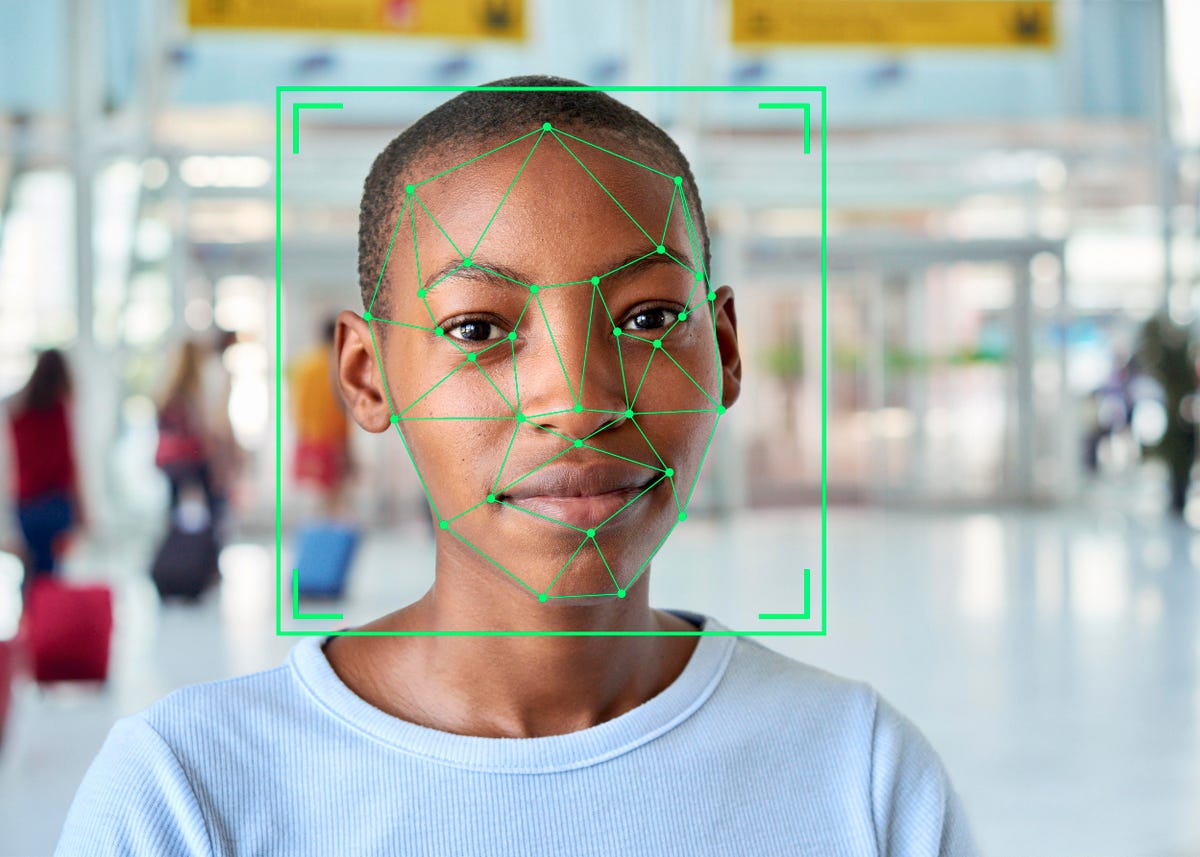

As a strong advocate of proper regulation of facial recognition technology, Microsoft has quietly taken down its MS Celeb database, which contained more than 10 million images. The people pictured included journalists, artists, musicians, activists, policymakers, writers and researchers. The removal effectively ends the database's role in the potential misuse of facial recognition technology, which could lead to incidents of racial profiling.

The breakdown you need to know

Launched in 2016, the database consisted of online photos of 100,000 well-known people. Microsoft explained the removal of the dataset to the Financial Times as a simple matter of internal corporate protocol. “The site was for educational purposes,” the company said. “It was run by an employee who is no longer at Microsoft.”

The removal comes after Microsoft urged U.S. politicians last year to do more to regulate facial recognition systems. In addition, it urged governments around the world to regulate the use of facial recognition technology. CultureBanx noted that the software giant wants to ensure that the technology, with its higher error rates, does not invade privacy or become a tool of discrimination or surveillance.

Microsoft even blocked sales of its facial recognition technology to California police departments that wanted to use it on cameras and cars. Not to mention, studies show that commercial AI systems tend to have higher error rates for women and Black people. Some facial recognition systems would misidentify light-skinned men only 0.8% of the time, but would have a 34.7% error rate for dark-skinned women.

The alarm goes off

Last December, Microsoft President Brad Smith wrote on the company’s website about the importance of preventing facial recognition technology from being used for unlawful discrimination. He noted that it is vital that organizations understand that they are “not exempt from their legal responsibility to comply with legislation prohibiting discrimination against individual consumers or groups of consumers.”

Tech companies like Microsoft and Google have sounded the alarm to investors about the harm artificial intelligence could do to their brands. Specifically, last December, Microsoft wrote that “AI algorithms may be flawed. Datasets may be insufficient or contain biased information. If we enable or offer AI solutions that are controversial because of their impact on human rights, privacy, employment, or other social issues, we may experience brand or reputational harm.”

Remember that artificial intelligence systems are shaped by the nature of what they are “taught.” The use of facial recognition technology has a disparate impact on people of color, disadvantaging a group already facing inequality. Given that Microsoft and Google have been working on A.I. for years, we have to ask ourselves why they have only now started denouncing its potentially harmful effects, and when the rest of the industry will too.

In addition, Microsoft and Google are not the only ones concerned about the misuse of facial recognition services. The ACLU has called out companies like Amazon, whose “Rekognition” tool has been deployed in law enforcement departments in the United States, as a threat to civil liberties. Meanwhile, Democratic lawmakers have called for assurances that police departments using Rekognition won’t abuse their power.

Some researchers claim that although MS Celeb was removed, shared copies of its contents can still be found online. Essentially, this means that once data appears on the internet and other people download it, it never really goes away.